MLOps: The Engine Behind Scalable, Production-Ready Machine Learning

- Mohammed Juyel Haque

- Jul 19


🧠 Introduction

In machine learning, building a model is just the beginning. The real challenge lies in operationalizing that model: taking it from a Jupyter notebook into a real-world system where it serves predictions reliably, scales under demand, and adapts over time.

Welcome to MLOps, a discipline that combines machine learning with DevOps practices to bridge the gap between experimentation and production.

🔍 What is MLOps?

MLOps (short for Machine Learning Operations) is a set of best practices and tools that streamline the deployment, monitoring, retraining, and governance of machine learning models.

Just like DevOps revolutionized software delivery, MLOps is revolutionizing ML delivery by introducing:

- Continuous Integration and Delivery (CI/CD)
- Automation
- Versioning
- Monitoring
- Collaboration between data scientists and engineers

🧩 Core Components of MLOps

Let’s break down a typical MLOps workflow:

1️⃣ Data & Code Versioning

Tools: Git, DVC, LakeFS

Ensure reproducibility by versioning datasets, code, and model weights.
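To make the versioning idea concrete, here is a minimal sketch that fingerprints a dataset, a training script, and model weights by content hash and stores the result as a manifest. It uses only the Python standard library and is not how Git, DVC, or LakeFS work internally; the function names `fingerprint` and `build_manifest` and the sample bytes are made up for illustration.

```python
import hashlib
import json


def fingerprint(data: bytes) -> str:
    """Return a short content hash that identifies this exact byte content."""
    return hashlib.sha256(data).hexdigest()[:12]


def build_manifest(dataset: bytes, code: bytes, weights: bytes) -> dict:
    """Record a version for every input that went into producing a model."""
    return {
        "dataset": fingerprint(dataset),
        "code": fingerprint(code),
        "weights": fingerprint(weights),
    }


# Two hypothetical runs: same code and weights, but one extra data row.
v1 = build_manifest(b"id,churned\n1,0\n2,1\n", b"train.py v1", b"\x00\x01")
v2 = build_manifest(b"id,churned\n1,0\n2,1\n3,0\n", b"train.py v1", b"\x00\x01")

# Comparing manifests shows exactly which input changed between runs.
changed = [key for key in v1 if v1[key] != v2[key]]
print(json.dumps(v1, indent=2))
print("changed between runs:", changed)
```

Storing a manifest like this next to each trained model is one simple way to answer "which data produced this model?" later on.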

2️⃣ Experiment Tracking

Tools: MLflow, Weights & Biases, Neptune

Log metrics, parameters, and artifacts to compare multiple experiments.
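The logging-and-comparing loop can be sketched in a few lines without any tracking server. The `Tracker` and `Run` classes below are hypothetical stand-ins, not the API of MLflow, Weights & Biases, or Neptune, and the AUC values are invented purely to show the comparison step.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    """One experiment: a name plus the parameters and metrics it logged."""
    name: str
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)


class Tracker:
    """In-memory experiment log; a real tool would persist this."""

    def __init__(self):
        self.runs = []

    def log_run(self, name, params, metrics):
        run = Run(name, dict(params), dict(metrics))
        self.runs.append(run)
        return run

    def best(self, metric, higher_is_better=True):
        """Return the run with the best value for the given metric."""
        candidates = [r for r in self.runs if metric in r.metrics]
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda r: r.metrics[metric])


tracker = Tracker()
# Illustrative numbers only, not real results.
tracker.log_run("baseline", {"max_depth": 3}, {"auc": 0.81})
tracker.log_run("deeper", {"max_depth": 6}, {"auc": 0.86})
tracker.log_run("too_deep", {"max_depth": 12}, {"auc": 0.83})

best = tracker.best("auc")
print(best.name, best.params, best.metrics)
```

Dedicated tracking tools add persistence, artifact storage, and a UI on top of this basic pattern.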

3️⃣ Pipeline Automation

Tools: Airflow, Kubeflow, ZenML

Automate the flow: from data preprocessing to model training and deployment.

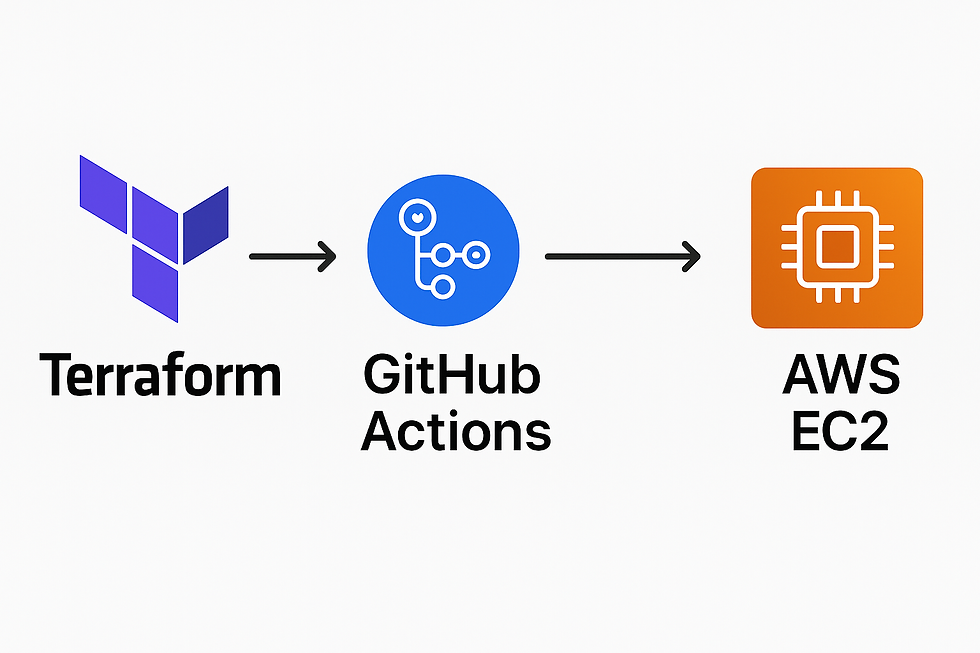

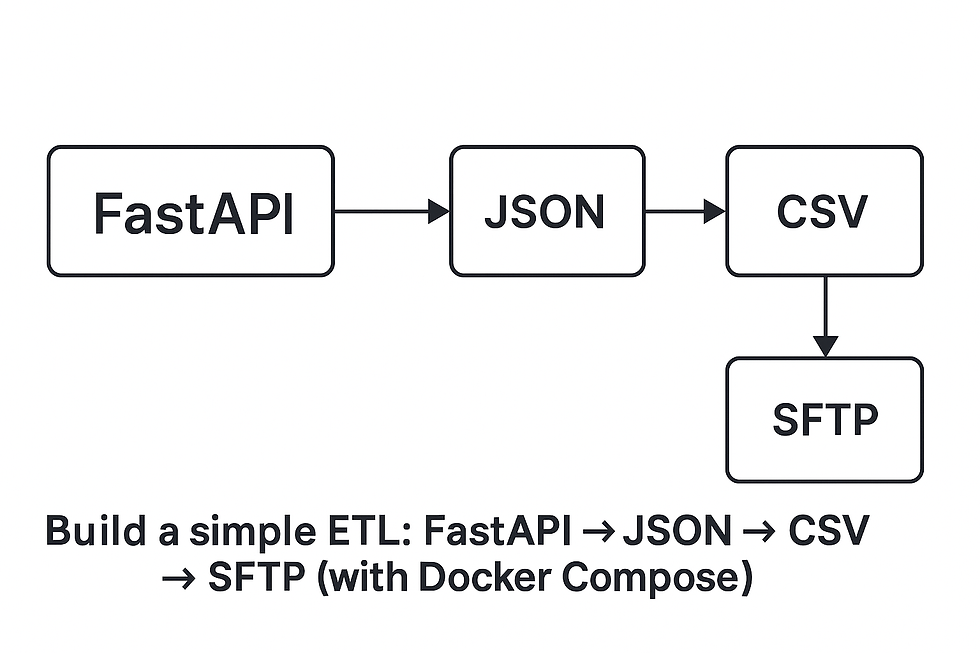
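At its core, pipeline automation means declaring steps and their dependencies, then letting a scheduler run them in a valid order. The sketch below does this with the standard library's `graphlib` (Python 3.9+). The step functions and the "model" (just a mean) are placeholders, and this is far simpler than what Airflow, Kubeflow, or ZenML actually provide.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def preprocess(ctx):
    # Drop missing values from the raw input.
    ctx["clean"] = [x for x in ctx["raw"] if x is not None]


def train(ctx):
    # Placeholder "model": the mean of the cleaned data.
    ctx["model"] = sum(ctx["clean"]) / len(ctx["clean"])


def deploy(ctx):
    # Placeholder for pushing the model to a serving environment.
    ctx["deployed"] = True


STEPS = {"preprocess": preprocess, "train": train, "deploy": deploy}

# Each step maps to the set of steps that must finish before it starts.
DEPENDS_ON = {
    "preprocess": set(),
    "train": {"preprocess"},
    "deploy": {"train"},
}


def run_pipeline(ctx):
    """Run every step in dependency order and return the execution order."""
    order = list(TopologicalSorter(DEPENDS_ON).static_order())
    for name in order:
        STEPS[name](ctx)
    return order


context = {"raw": [1.0, None, 3.0]}
executed = run_pipeline(context)
print(executed, context["model"])
```

Orchestrators build scheduling, retries, and distributed execution on top of the same dependency-graph idea.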

4️⃣ Model Deployment

Tools: FastAPI, BentoML, Flask, Docker, Kubernetes

Package models into APIs and serve them in real-time or batch mode.
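Here is a rough sketch of what sits behind a prediction endpoint: a handler that takes one JSON record and returns one prediction (real-time), plus a function that scores many records at once (batch). It deliberately avoids any web framework. The coefficients, feature names, and function names are hypothetical, and a real service would wrap logic like this in something such as FastAPI or BentoML.

```python
import json
import math

# Hypothetical coefficients standing in for a trained churn model.
WEIGHTS = {"tenure_months": -0.05, "support_tickets": 0.4}
BIAS = -0.5


def predict_one(features: dict) -> float:
    """Return a churn probability for a single customer record."""
    missing = [name for name in WEIGHTS if name not in features]
    if missing:
        raise ValueError(f"missing features: {missing}")
    score = BIAS + sum(WEIGHTS[n] * float(features[n]) for n in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-score))  # logistic function


def handle_request(body: str) -> str:
    """Real-time path: one JSON record in, one JSON response out."""
    try:
        prob = predict_one(json.loads(body))
    except (ValueError, TypeError) as exc:  # bad JSON or bad features
        return json.dumps({"error": str(exc)})
    return json.dumps({"churn_probability": round(prob, 4)})


def score_batch(records: list) -> list:
    """Batch path: score a whole list of records in one call."""
    return [predict_one(r) for r in records]


print(handle_request('{"tenure_months": 10, "support_tickets": 0}'))
print(handle_request('{"tenure_months": 10}'))
print(score_batch([
    {"tenure_months": 1, "support_tickets": 5},
    {"tenure_months": 48, "support_tickets": 0},
]))
```

Keeping `predict_one` separate from the transport layer means the same function can back both the API and a nightly batch job.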

5️⃣ Monitoring & Feedback Loops

Tools: Prometheus, Grafana, Evidently AI

Track performance, detect drift, and trigger retraining if necessary.
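One way to detect drift is to compare the distribution of a feature at training time against what the model sees in production. The sketch below uses the Population Stability Index (PSI) for this. It is independent of any monitoring tool, the bin edges and sample values are invented, and the 0.2 retraining threshold is an assumption you would tune for your own data. Both inputs are assumed to be non-empty, and `edges` is assumed to be sorted.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two samples of one feature.

    `edges` are sorted bin boundaries; `eps` avoids log(0) for empty bins.
    """
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            idx = sum(v >= e for e in edges)  # index of the bin holding v
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    p = proportions(expected)
    q = proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def needs_retraining(expected, actual, edges, threshold=0.2):
    """Flag retraining when drift exceeds the (assumed) threshold."""
    return psi(expected, actual, edges) > threshold


# Made-up feature values: training data vs. two production windows.
training = [1, 2, 3, 4, 5, 6, 7, 8]
stable = [2, 1, 4, 3, 6, 5, 8, 7]
shifted = [6, 7, 8, 9, 10, 11, 12, 13]
edges = [3, 6]

print("stable window PSI:", psi(training, stable, edges))
print("shifted window PSI:", psi(training, shifted, edges))
print("retrain?", needs_retraining(training, shifted, edges))
```

A check like this can run on a schedule and, when it fires, kick off the training pipeline again.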

🔥 2025 MLOps Trends to Watch

🔁 LLMOps: Managing Large Language Models

Specialized workflows for LLMs from providers and model families such as OpenAI, LLaMA, and Cohere

Focus on prompt testing, fine-tuning, cost optimization, and hallucination control
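Prompt testing can be treated like regression testing: keep a set of prompts with expectations and rerun them whenever the prompt template or model changes. The sketch below shows the shape of such a suite. `fake_llm` is a stub with canned answers rather than a call to any real provider, and the substring check is a deliberately crude stand-in for real evaluation.

```python
def run_prompt_suite(generate, cases):
    """Run each prompt through `generate` and return the prompts that fail.

    A case passes when every required term appears in the output
    (case-insensitive substring match).
    """
    failures = []
    for case in cases:
        output = generate(case["prompt"]).lower()
        if not all(term.lower() in output for term in case["must_contain"]):
            failures.append(case["prompt"])
    return failures


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real model call; returns canned answers."""
    canned = {
        "What is MLOps?": "MLOps applies DevOps practices to machine learning.",
        "Name one drift detection tool.": "I am not sure.",
    }
    return canned.get(prompt, "")


CASES = [
    {"prompt": "What is MLOps?", "must_contain": ["DevOps", "machine learning"]},
    {"prompt": "Name one drift detection tool.", "must_contain": ["Evidently"]},
]

failures = run_prompt_suite(fake_llm, CASES)
print("failing prompts:", failures)
```

Swapping `fake_llm` for a function that calls your actual model turns this into a check you can run in CI.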

☁️ Serverless MLOps

Reduce infra complexity using AWS Lambda, Google Cloud Functions, and Vertex AI Pipelines

⚖️ Responsible AI & Model Governance

Practices and tools such as model cards, explainability methods, and bias detection

Compliance with regulations like GDPR and HIPAA

📱 Edge MLOps

Deploying optimized models to mobile or edge devices using ONNX, TFLite, and TensorRT
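One common optimization before shipping a model to a constrained device is quantization: storing weights as small integers instead of floats. The sketch below shows symmetric 8-bit quantization in plain Python to illustrate the idea. It is not the ONNX, TFLite, or TensorRT API, and the weights are made up.

```python
def quantize(values, num_bits=8):
    """Symmetric quantization: map floats to signed integers plus a scale."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs else 1.0
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


# Made-up weights for illustration.
weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print("max round-trip error:", max_error)
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale per weight.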

💼 Managed MLOps Platforms

Examples:

- AWS SageMaker Pipelines
- Azure Machine Learning Studio
- GCP Vertex AI
- Hugging Face AutoTrain

🛠️ Example MLOps Tech Stack: Customer Churn Prediction

| Stage | Tools & Platforms |
| --- | --- |
| Data Ingestion | Apache Kafka, Airbyte |
| Data Storage | Delta Lake, Amazon S3 |
| Feature Engineering | Pandas, Featuretools, Feast |
| Experiment Tracking | MLflow |
| Model Training | Scikit-learn / XGBoost |
| Model Registry | MLflow Model Registry |
| Model Deployment | FastAPI + Docker + Kubernetes |
| Monitoring | Evidently AI, Prometheus, Grafana |

💡 Real-World Benefits of MLOps

🚀 Faster Time-to-Market: Automate the ML pipeline to reduce deployment time.

🔄 Consistency: From training to serving, pipelines remain repeatable and reproducible.

🛡️ Robust Governance: Track every version, model, and dataset for audit and compliance.

🔍 Improved Monitoring: Detect model drift and trigger retraining automatically.

📣 Final Thoughts

MLOps is no longer a "nice to have." It's a critical capability for any organization aiming to scale AI. As ML becomes an integral part of business applications, robust pipelines, model lifecycle management, and continuous monitoring are essential.

Whether you're a data scientist, DevOps engineer, or cloud architect, investing time in mastering MLOps will future-proof your ML career.
